Content verification: A project for photo authenticity in journalism

Reuters, Canon and Starling Lab are working on a groundbreaking project to protect the authenticity of photos in journalism. By combining the authentication workflows in Fotoware DAM with blockchain technology, they help the audience tell real photos apart from AI-generated images and thereby preserve trust in photographers.

Generative AI in the news

With the boom in Artificial Intelligence (AI) and the growing accessibility of the technology, generative AI has become a hot topic, and concerns are growing louder that it threatens the authenticity of the news we consume. Generative AI can produce new content such as images, video, text, music, speech, product designs, and software code at scale, based on natural-language prompts.

This poses a significant challenge for photojournalists, whose mission is to capture reality in order to inform their audience reliably and objectively. The spread of AI-generated images and content fuels misinformation and fake news, because AI has become so powerful that authentic and generated images can be indistinguishable to the naked eye.

The case of the Pope in Balenciaga is just one example of this risk: an AI-fabricated photo of the Pope wearing a white Balenciaga puffer jacket went viral and deceived many viewers.

— "Everyone has a need to distinguish whether what they see is real or fake."

Content authenticity in journalism – a proof of concept by Reuters

The image may have been fake, but its impact on the world around us is real. How can we make sure that what we see is genuine? Reuters raised the same question. It is one of the largest news agencies in the world, with hundreds of photojournalists around the globe and a mission to inform people from the source in an unbiased manner. Challenged to protect the trust it has built over decades, the agency is leaping forward and exploring new technological possibilities.

Together with Canon, a leading global camera manufacturer, and Starling Lab, an academic research lab innovating with the latest cryptographic methods, Reuters has developed a workflow to digitally sign photographic metadata:

When a journalist captures a picture, the camera automatically signs the image’s metadata with a device-specific key. From then on, the image is always accompanied by verifiable data, such as timestamps, locations, and edit history.

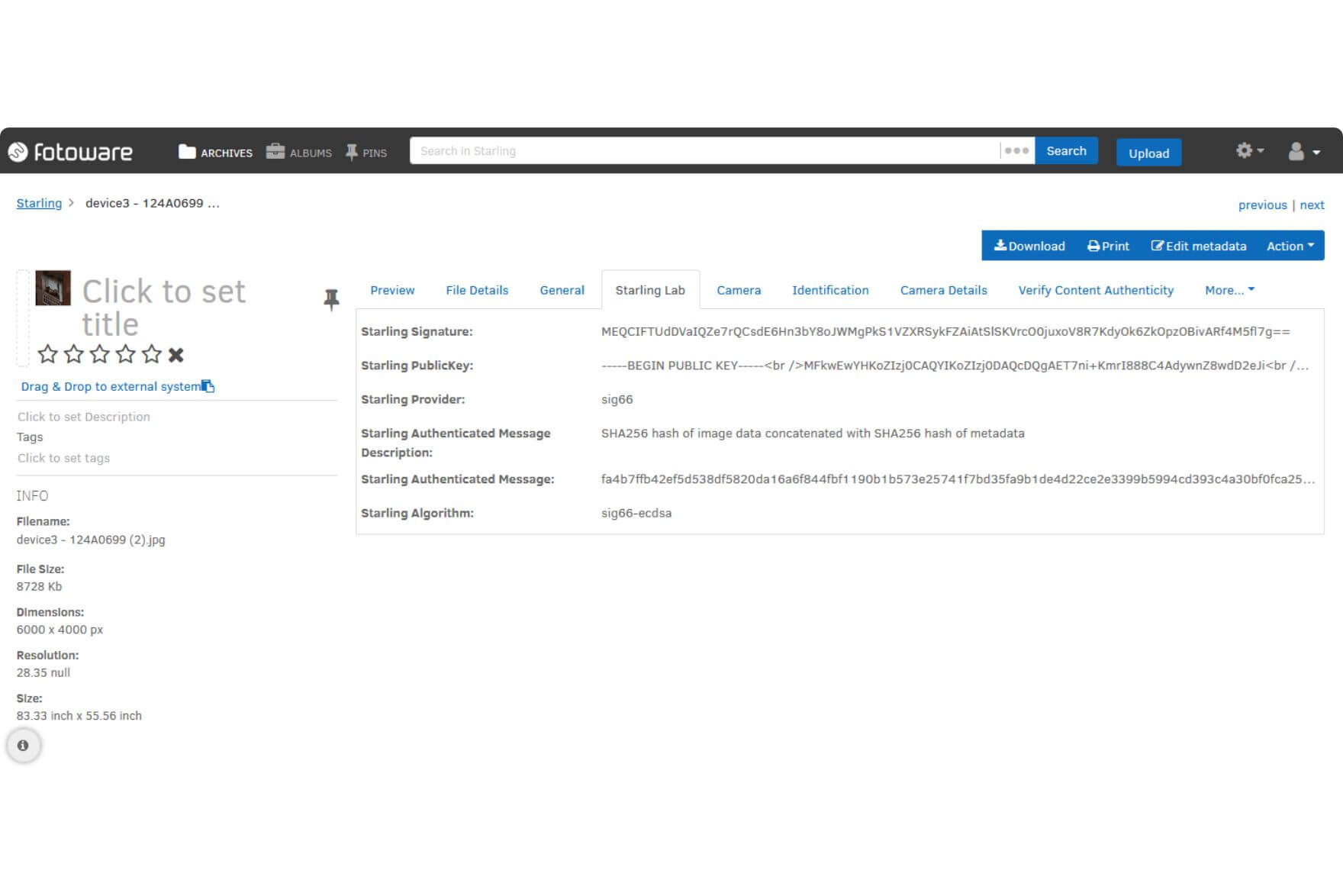

The authenticated image and its metadata are then ingested directly into Reuters’ Fotoware Digital Asset Management system, from which the image is distributed and finally published, always carrying its embedded signature. Because this signature is registered on a blockchain, consumers can validate the image’s authenticity by comparing its hash with the value recorded in the public ledger.
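To make the verification step more concrete, here is a minimal Python sketch of the hash comparison. It assumes SHA-256 as the hash function and uses an in-memory dictionary as a hypothetical stand-in for the public ledger. The names `LEDGER`, `register` and `verify` are illustrative only; they are not part of Fotoware, Starling Lab, or any blockchain API, and checking the camera’s signature itself is left out.

```python
import hashlib

# Hypothetical stand-in for the public ledger (assumption, not a real
# blockchain): maps an asset ID to the SHA-256 hex digest recorded at ingest.
LEDGER: dict[str, str] = {}


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the file bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def register(asset_id: str, image_bytes: bytes) -> str:
    """Record the image's hash in the simulated ledger and return it."""
    digest = sha256_hex(image_bytes)
    LEDGER[asset_id] = digest
    return digest


def verify(asset_id: str, image_bytes: bytes) -> bool:
    """Recompute the hash of the received file and compare it with the ledger."""
    recorded = LEDGER.get(asset_id)
    return recorded is not None and recorded == sha256_hex(image_bytes)


if __name__ == "__main__":
    original = b"example image bytes"            # placeholder file content
    register("example-asset-001", original)      # hypothetical asset ID
    print(verify("example-asset-001", original))            # True
    print(verify("example-asset-001", original + b"\x00"))  # False
```

Because any change to the file, even a single appended byte, yields a different digest, `verify` returns `False` for an altered copy.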

— "One thing is how we experience it as an audience, but I think the most important thing is to give journalists and photographers, who actually document real events, an opportunity to show that what they do is real. An opportunity to prove that they haven't changed the facts."

Content Credentials reduce fake news

Fortunately, this project is not the only one committed to reducing misinformation and fake news and to increasing the credibility of journalists. Content Credentials – a technology driven by the C2PA standard and the Content Authenticity Initiative – are being implemented by organizations all over the world.

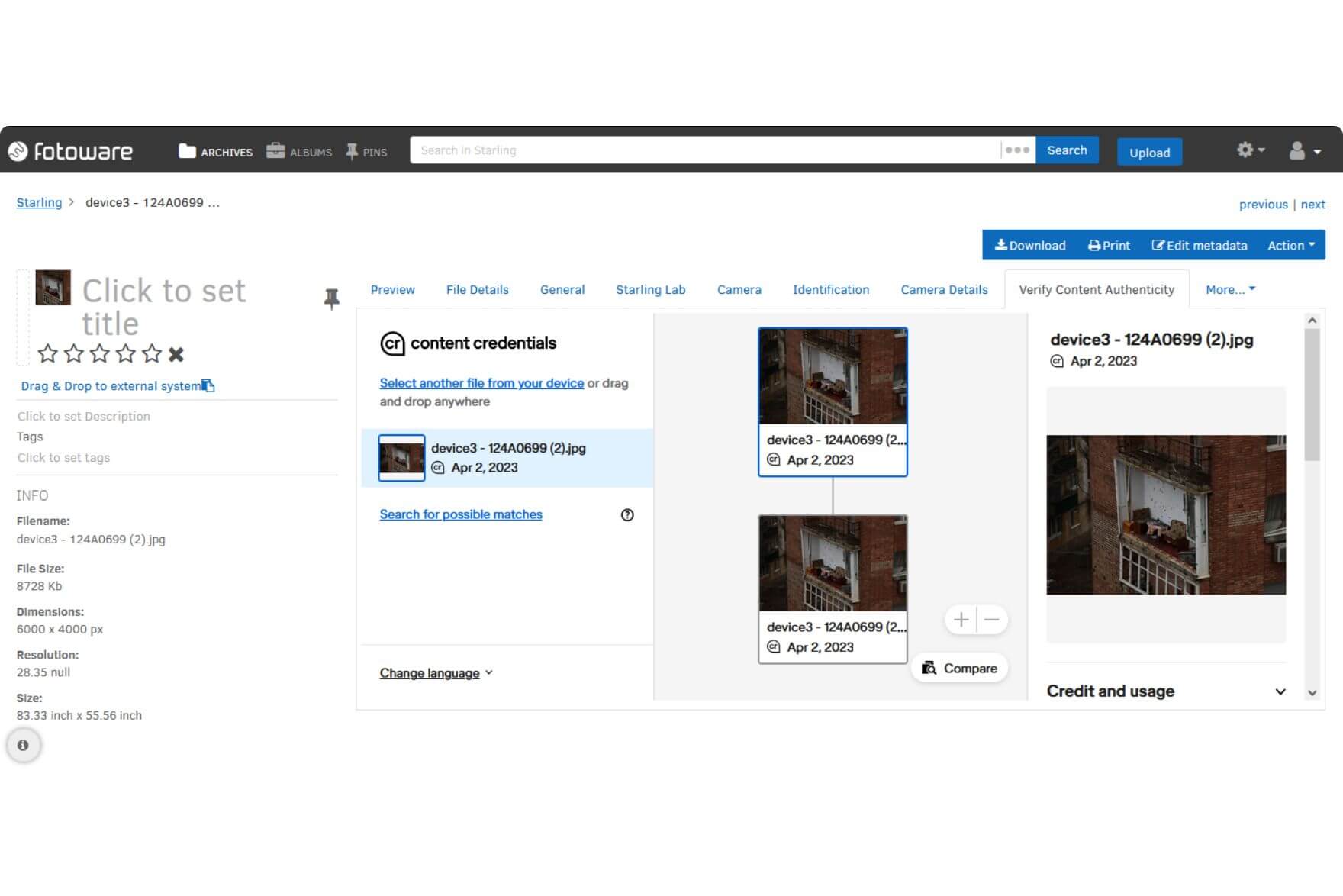

Content Credentials provide proof of every step in an image’s journey – including origin, edits, use of AI, and more – and create a history of all changes made. This information is embedded in the file’s metadata and cryptographically bound to it, so tampering can be detected, making it clear to the viewer whether an image has been altered since its creation.
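The idea of a change history that exposes tampering can be illustrated with a hash chain, in which each entry’s hash also covers the previous entry’s hash. The Python sketch below is a simplified, hypothetical model, not the C2PA manifest format: real Content Credentials are digitally signed, whereas an unsigned chain like this one could simply be rebuilt by whoever edits it, so in practice its latest hash would have to be signed or anchored somewhere trusted. The names `Entry`, `append` and `is_intact` are illustrative only.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Entry:
    action: str      # e.g. "captured", "cropped" (illustrative labels)
    prev_hash: str   # hash of the previous entry ("" for the first one)
    entry_hash: str  # SHA-256 over this entry's action and prev_hash


def _digest(action: str, prev_hash: str) -> str:
    """Hash a canonical JSON encoding of the entry's contents."""
    payload = json.dumps({"action": action, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(history: list[Entry], action: str) -> None:
    """Add an entry whose hash links it to the previous entry."""
    prev = history[-1].entry_hash if history else ""
    history.append(Entry(action, prev, _digest(action, prev)))


def is_intact(history: list[Entry]) -> bool:
    """Recompute every link; any edited entry breaks the chain."""
    prev = ""
    for entry in history:
        if entry.prev_hash != prev or entry.entry_hash != _digest(entry.action, prev):
            return False
        prev = entry.entry_hash
    return True


if __name__ == "__main__":
    history: list[Entry] = []
    append(history, "captured")
    append(history, "cropped")
    print(is_intact(history))           # True
    history[0].action = "generated by AI"
    print(is_intact(history))           # False
```

Rewriting an earlier step changes that entry’s expected hash, so the check fails unless every later hash is recomputed as well, which is exactly what a signature over the latest entry would prevent.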

With our roots in journalism, we at Fotoware are proud to be a member of the Content Authenticity Initiative and to contribute to Reuters’ monumental project to protect the authenticity of images, preserve trust in photojournalists, and minimize misinformation spread through fabricated content.